Appendix A: Data & Methodology

This section details the background and makeup of the two-year survey data set centered on industry professionals, executive interviews, and content analysis. The analytical framework answers a three-part question: Are there groupings that can explain differences in SCS measures, how have SCS practices changed from 2019 to 2020, and what is the impact of Covid-19 on SCS commitments? First, we describe the background and state of our survey data and provide an overview of the data cleaning and segmentation processes. Next, we describe the imputation techniques used to address missing data. Finally, we introduce the other analysis approaches, namely k-means clustering for company types, correlation-based groupings for practice clusters, and analysis of variance (ANOVA), and conclude with the interviews and content analysis.

Survey Data Framework

The survey questions were developed after careful consideration of existing research on supply chain sustainability, input from supply chain experts at MIT CTL, CSCMP, and other organizations, and the growing corpus of corporate sustainability reports. The questions were then tested and validated by supply chain professionals to assess their effectiveness and usefulness in a real-world setting. For the 2020 survey, modifications to the question format, arrangement, and answer choices were made based on an analysis of the survey [75], team experience, and feedback from external partners. Both the 2019 and 2020 surveys were conducted between October and November and advertised in multiple media outlets, with a primary focus on network email lists and LinkedIn outreach.

Data Mapping

Given that the 2021 report survey is a direct evolution of the 2020 survey, there are similarities and differences that need to be identified to properly compare both years’ results. The layout of both surveys is similar, and both include questions using a Likert scale (1–5 with greater intensity) and questions that are heavily categorical. As seen in Table 3, the 2019 survey data was mapped to 2020 data for comparison between the two years. If multiple answer choices were mapped to one answer, we used the average of the Likert responses. If a question was added or subtracted, such as the addition of questions specific to Covid-19, it was only analyzed within the context of that year.
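
The averaging rule for merged answer choices can be sketched as follows. This is a minimal illustration, not the code used for the report: the answer-choice names and the `MAPPING_2019_TO_2020` dictionary are hypothetical placeholders, since the actual mapping is given in Table 3.

```python
from statistics import mean

# Hypothetical mapping (illustration only): each 2020 answer choice lists
# the 2019 answer choices that were merged into it.
MAPPING_2019_TO_2020 = {
    "emissions": ["carbon_emissions", "energy_use"],
    "water": ["water_use"],
}


def map_2019_response(response_2019, mapping):
    """Convert one 2019 response (choice -> Likert score) to the 2020 layout.

    When several 2019 choices map to one 2020 choice, the mapped score is
    the average of the available Likert responses. Blank answers (None)
    are ignored; if every source answer is blank, the result stays None.
    """
    mapped = {}
    for new_choice, old_choices in mapping.items():
        scores = [
            response_2019[c]
            for c in old_choices
            if response_2019.get(c) is not None
        ]
        mapped[new_choice] = mean(scores) if scores else None
    return mapped


example_2019 = {"carbon_emissions": 4, "energy_use": 2, "water_use": 5}
mapped_example = map_2019_response(example_2019, MAPPING_2019_TO_2020)
```

Questions that exist in only one year would simply be left out of the mapping, which matches the rule that they are analyzed only within their own year.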

Changes were also made to some of the demographic questions. In the 2019 survey, we asked respondents to provide the country of their company headquarters, but for 2020, we asked for the continent. The 2020 survey also allowed individuals to select multiple locations. For comparative analysis, a single location was decided based on survey size for each continent.

Data Imputation

- Class 1: For parent SL questions with an answer of “yes” but blank responses for child SL questions, we can reasonably conclude they intended to select “no” to the missing topics and so used a Likert scale choice of 1.

- Class 2: For parent SL questions with a definitive answer of “no”, we directly imputed their corresponding child SL answers to “no” which corresponds to the lowest available Likert scale choice of 1.

- Class 3: For parent SL questions with an answer of “not sure” or “skip”, it is much harder to determine the correct course of action for the missing data, as we do not have a clear indicator for how they would have responded.
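
The three classes above can be expressed as a small rule on the parent answer. The sketch below is an assumption-laden illustration: the function name, the string encoding of parent answers, and the use of `None` for blank child answers are all hypothetical, not taken from the survey tooling.

```python
LIKERT_NO = 1  # lowest Likert choice, used as the imputed "no"


def impute_children(parent_answer, child_answers, classes=(1, 2)):
    """Fill blank (None) child SL answers based on the parent SL answer.

    Class 1: parent answered "yes"  -> blank children become 1.
    Class 2: parent answered "no"   -> blank children become 1.
    Class 3: parent answered "not sure" or "skip" -> left blank, because
             there is no clear indicator of the intended response.
    `classes` selects which imputation classes are applied.
    """
    apply_rule = (parent_answer == "yes" and 1 in classes) or (
        parent_answer == "no" and 2 in classes
    )
    if not apply_rule:
        return list(child_answers)
    return [LIKERT_NO if a is None else a for a in child_answers]
```

For example, `impute_children("yes", [3, None, 5])` fills only the blank entry, while passing `classes=(1,)` leaves the children of a "no" parent untouched.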

Clustering

- Phase 1: k-Nearest Neighbors (KNN) imputation for missing parameters and k-means clustering (k = 3 and 4) with each dataset separately

- Phase 2: KNN imputation for missing parameters and k-means (k = 5) on 2020 survey data then predicted cluster class for 2019 data

- Phase 3: Listwise deletion of missing parameters and k-means (k = 6) clustering on the combined 2019 and 2020 survey data

Table 4: Parent-child SL imputation for clustering analysis.

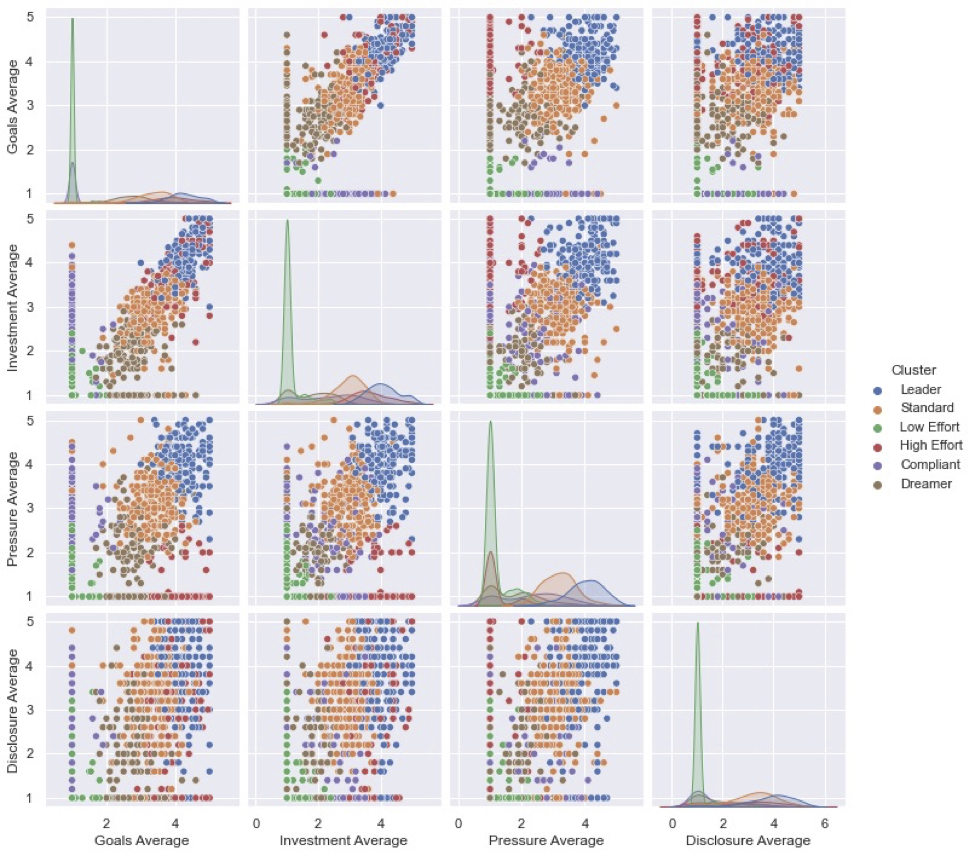

Figure 23: Graphical representation of the 6 clusters and how they formed across the four main framework areas. N = 1,557.

In Phase 1, k-means clustering (k = 3) was conducted on the child SL answers using the Likert-scaled data from the 2019 and 2020 surveys separately. An outlier cluster was identified, reflecting responses with missing data. After evaluation and analysis, we re-ran the clustering with k = 4 on the data set excluding that outlier cluster. In Phase 2, we trained k-means clustering with k = 5 on the 2020 dataset (excluding the Phase 1 outlier class) and then predicted the cluster class for the 2019 dataset (also excluding the Phase 1 outlier class). Lastly, in Phase 3, we used listwise deletion instead of the outlier class, removing responses with missing data and clustering only on complete responses. Although the sample size for Phase 3 was smaller, the results were very similar to those of the Phase 2 approach, so we decided to proceed with the Phase 3 approach. A scree plot confirmed that 6 was an appropriate number of groupings to use.
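
The Phase 3 idea (listwise deletion followed by k-means) can be illustrated with a small standard-library sketch. It uses plain Lloyd's algorithm with random initialization, which is an assumption; the report does not state which implementation or initialization was used. The toy `responses` data and all function names are hypothetical.

```python
import random


def listwise_delete(rows):
    """Keep only complete responses (rows without any None value)."""
    return [r for r in rows if all(v is not None for v in r)]


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(rows, k, n_iter=100, seed=0):
    """Plain Lloyd's algorithm; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = [list(r) for r in rng.sample(rows, k)]
    labels = None
    for _ in range(n_iter):
        # Assignment step: each row goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: sq_dist(r, centroids[j])) for r in rows
        ]
        if new_labels == labels:  # assignments stable, so stop
            break
        labels = new_labels
        # Update step: each centroid becomes the mean of its members.
        for j in range(k):
            members = [r for r, lab in zip(rows, labels) if lab == j]
            if members:  # an emptied cluster keeps its previous centroid
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, labels


def inertia(rows, centroids, labels):
    """Within-cluster sum of squares, the quantity plotted in a scree plot."""
    return sum(sq_dist(r, centroids[lab]) for r, lab in zip(rows, labels))


# Toy Likert responses (hypothetical); the last row has a missing answer.
responses = [
    [1, 1, 2, 1], [1, 2, 1, 1], [2, 1, 1, 1],
    [5, 5, 4, 5], [5, 4, 5, 5], [4, 5, 5, 5],
    [None, 3, 3, 3],
]
complete = listwise_delete(responses)
centroids, labels = kmeans(complete, k=2)
```

Computing `inertia` for a range of k values and plotting it against k gives the kind of scree plot used to judge the number of groupings.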

Full results of the clustering typology model and firm classification can be seen in Figure 23 and Table 5.

Practice Groupings

Table 5: Full heatmap results of the two years for the clustering exercise for firm behavior across the SCS components. Summarized in Table 2 in the Typology section with firm characteristics.

Descriptive Analysis

To better understand the current state of supply chain sustainability and help quantify what changed from the prior year, we conducted a descriptive analysis and summary of the survey results using data visualizations. This approach also allowed us to use data with missing parameters.

We tested Class 1 and Class 2 imputations in our visualizations. Given the skewed nature of the Class 2 results and a desire to match last year’s report, we ultimately settled on a hybrid approach (see Table 6). The visualizations kept the Class 1 imputation, which fills the blank child responses with “no” if the parent response was initially “yes”, and left the remaining answers blank, matching last year’s visualizations.

Goals vs. Investment Statistical Analysis

Non-Parametric Analysis of Covid-19 Questions

In the 2020 survey, a new group of questions related to Covid-19 was added, including a section asking respondents whether their firm’s commitments to supply chain sustainability had changed due to the pandemic. We then tested the hypothesis that some industries will reduce sustainability commitments with an analysis of variance (ANOVA).

With the industry groups set as the independent variable, we used the Kruskal-Wallis test (one-way ANOVA on ranks) to identify whether there are statistically significant differences among these industry groups and their Covid-19 commitment.
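
For reference, the Kruskal-Wallis H statistic can be computed directly from pooled ranks. The sketch below is illustrative only: the function names are hypothetical, and it returns the tie-corrected H without a p-value. Under the null hypothesis, H is compared against a chi-square distribution with (number of groups − 1) degrees of freedom; in practice a statistics library routine such as `scipy.stats.kruskal` would typically be used.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H for a list of samples.

    Raises ZeroDivisionError if every pooled value is identical,
    because the tie correction is then zero.
    """
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    return h / (1 - ties / (n ** 3 - n))


# Hypothetical Likert responses on Covid-19 commitment for three industry groups.
h_stat = kruskal_wallis_h([[2, 3, 3, 4], [1, 2, 2, 3], [4, 4, 5, 5]])
```

Because Likert data contain many ties, the tie correction in the last line of `kruskal_wallis_h` matters more here than it would for continuous measurements.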

To analyze changes in other SCS measures between 2019 and 2020, we ran multiple Mann–Whitney U tests, the nonparametric counterpart of the independent-samples t-test. These tests compared the goals, disclosures, pressure, and investment measures between the 2019 and 2020 surveys.
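
A minimal sketch of a two-sample Mann–Whitney U comparison is shown below. It is an illustration under stated assumptions rather than the procedure used for the report: the function name is hypothetical, U is computed by direct pairwise comparison, and the p-value uses a tie-corrected normal approximation without a continuity correction, which is only reasonable for larger samples.

```python
import math
from collections import Counter


def mann_whitney_u(x, y):
    """Return (U_x, U_y, z, two-sided p) for two independent samples.

    U_x counts the pairs where a value from x exceeds a value from y,
    with tied pairs counted as one half.
    """
    n1, n2 = len(x), len(y)
    u_x = sum(
        1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y
    )
    u_y = n1 * n2 - u_x
    n = n1 + n2
    # Tie correction for the variance of U under the null hypothesis.
    ties = sum(t ** 3 - t for t in Counter(list(x) + list(y)).values())
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1))))
    z = (u_x - n1 * n2 / 2) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return u_x, u_y, z, p


# Hypothetical Likert scores for one measure in each survey year.
scores_2019 = [2, 3, 3, 4, 2, 3]
scores_2020 = [3, 4, 4, 5, 3, 4]
u_2019, u_2020, z_score, p_value = mann_whitney_u(scores_2019, scores_2020)
```

Running one such test per measure means several tests are performed, so the individual p-values should be read with multiple comparisons in mind.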

Regression Analysis for Pressure Sources

To understand the effect of pressure sources on commitments, we modeled the level of commitment in each focus area as a function of the level of pressure received (see Table 7). Since we are working with Likert scale data, we used an ordinal logistic regression model. Elastic net regularization was used for variable selection.

For each target variable (the commitment level in each focus area), we first perform ordinal logistic regression with an elastic net penalty, using all the pressure sources as regressors. This is the variable selection step, after which we keep only those pressure sources that have nonzero coefficients. We then run the regression on the commitment level using only the chosen subset of pressure sources as regressors and report the coefficients and their corresponding p-values.
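
One common way to write this two-step procedure is given below. It assumes the proportional-odds (cumulative logit) form of ordinal logistic regression and the usual elastic net parameterization; the text does not specify either, so the notation ($\theta_j$, $\beta$, $\lambda$, $\alpha$, $S$) is illustrative. For a commitment level $Y$ on a 5-point Likert scale and a vector $x$ of pressure-source scores:

$$
\operatorname{logit} P(Y \le j \mid x) = \theta_j - \beta^\top x, \qquad j = 1, \dots, 4
$$

$$
(\hat{\theta}, \hat{\beta}) = \arg\min_{\theta, \beta} \; -\ell(\theta, \beta) + \lambda \left[ \alpha \lVert \beta \rVert_1 + \frac{1 - \alpha}{2} \lVert \beta \rVert_2^2 \right]
$$

$$
S = \{\, k : \hat{\beta}_k \neq 0 \,\}
$$

Here $\ell$ is the log-likelihood of the ordinal model, $\lambda \ge 0$ sets the overall penalty strength, and $\alpha \in [0, 1]$ balances the lasso and ridge terms. The second step refits the same model using only the regressors in $S$, and the reported coefficients and p-values come from that refit.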

Table 7: See summarized results in Table 1 in the Pressure section. Pressure sources with statistically significant regression coefficients for goal setting by focus area. N = 1,557.

Qualitative and Content Analysis

Executive Interviews

Twenty executives were selectively sampled based on an existing network to represent a range of industries (see Table 8). Each executive was asked the same set of questions via phone/web interview or email, and the questions were shared in advance of the interview. The interviews were analyzed for (1) key insights that either supported or contrasted the survey and content analysis findings; and (2) themes that emerged across the interviews. Questions included the range of content as covered in the survey inclusive of pressure, commitment, investment, disclosure, Covid-19, practices, and future outlook.

- How important is SCS in your industry? How might this change in this next five years?

- Do you think the pressure has increased for companies to pursue SC sustainability? Recently, in the last five or ten years, or not at all? Please explain your answer.

- What role do SC professionals generally play in pursuing sustainability? How can they make a difference in this space?

- In your industry, what distinguishes the most progressive companies in terms of their SC sustainability programs?

- Which areas of SC sustainability—e.g., labor, emissions, waste, water use—are afforded the highest priority in your company and industry?

- What are the biggest barriers to supply chain sustainability success and the practices that are the hardest to implement in your industry and company?

- Are there emerging technologies that you feel will play a role in enabling SC sustainability? If so, what are those technologies?

- How has Covid-19 impacted SC sustainability programs in your industry and company?

Published Content

To complement learnings from the survey and executive interviews, we reviewed an extensive selection of relevant documents. Over the course of 2019 and 2020, more than 300 documents were reviewed, including 75+ corporate social responsibility and sustainability reports, 100+ news articles, 75+ journal articles and research reports, and 25+ industry reports. News sources and relevant journal articles were collected using key phrases related to our research, such as “carbon emissions”, “supply chain management”, “sustainability”, and “child labor”, to identify relevant documents in aggregate news sources such as Factiva and Google News. A representative sample of industry CSR reports was selectively sampled. Industry reports were reviewed from key universities, organizations, and industry associations in the field. The content was organized into a timeline, based on relevance to the different survey metrics, and visualized as a treemap diagram.

The treemap diagrams were created using 400 news articles published in 2020. Articles were identified using Google News search, with the top 400 articles extracted based on the Google News search relevance setting. Nouns and adjectives were extracted from the title and text snippet that Google News generates for each article. The search term and any misclassified or filler words (e.g., “such” and “other”) were removed. The treemap diagrams are a plot of the relative word frequency within the articles. A treemap diagram illustrating word frequency when searching for “supply chain” articles can be seen in Figure 24.
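
The word-frequency step behind the treemaps can be sketched as follows. This is a simplified illustration: it does not perform the noun and adjective extraction described above (that would require a part-of-speech tagger) and instead only removes the search term and a small, hypothetical list of filler words. The function name and sample snippets are also hypothetical.

```python
import re
from collections import Counter

# Hypothetical filler-word list; the list used for the report is not given.
FILLER_WORDS = {
    "such", "other", "the", "a", "an", "and", "of", "in", "to", "for",
    "is", "are", "on", "as", "with",
}


def relative_word_frequency(snippets, search_term, filler=FILLER_WORDS):
    """Relative frequency of each remaining word across all snippets.

    Words belonging to the search term and filler words are dropped.
    The result is ordered from most to least frequent.
    """
    excluded = set(filler) | set(search_term.lower().split())
    counts = Counter(
        word
        for text in snippets
        for word in re.findall(r"[a-z]+", text.lower())
        if word not in excluded
    )
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: count / total for word, count in counts.most_common()}


sample_snippets = [
    "Supply chain disruption hits retailers",
    "Retailers rethink supply chain resilience",
]
frequencies = relative_word_frequency(sample_snippets, "supply chain")
```

Each value in `frequencies` would determine the area of one rectangle in the treemap.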

Table 8: Industry makeup of interviewees. N = 21.

Figure 24: A treemap diagram illustrating the frequency of words found in the top 400 articles extracted from Google News when searching “supply chain” in 2020. N = 400.

Appendix B: Limitations

This section will detail the general limitations of our analysis and the key issues we encountered in our data cleaning process.

Responder Bias & Self Selection Responses

Survey Representation

Missing Data

Manual Entries

Questions regarding company headquarters location, department affiliation, and industry type allowed respondents to manually enter an answer. To include this data in our analysis, a decision had to be made to recategorize these entries into a parent category. Given the ambiguity of some manual entries, a best-guess effort was made that may not be fully representative of the intent of the original response.